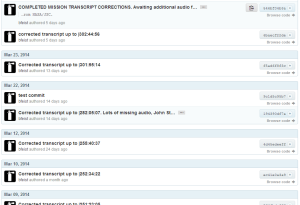

I can’t tell if I’m the tortoise or the hare, but March 31st was a big day. My GitHub logs show that I started the manual step of the transcript correction process on December 1, 2012, and completed it on March 31, 2014 (with big summer breaks in there). There are still many hours of missing audio material, but I’ve been in touch with the Johnson Space Center, Houston Audio Control Room, and they’ve been very responsive and helpful. They assured me that the missing material is on their to-do list, and they’re aiming to start getting it to me within the next few weeks. It feels good to get to the next step. I don’t need the missing material to begin post-processing the corrected material. As new audio becomes available, it will mean more manual correction, then re-running the automated post-processing actions I’m about to write.

Immediate next steps:

- Write a Python script to error-check the transcript by looking for out-of-order timestamps.

- Write a Python script to flip the MET to GET (the 2 hour 40 minute launch delay correction) at the right epoch in the mission.

- Enact the timecode change within the Premiere project by moving the timing of each clip after the epoch up by 2:40 (or possibly overlaying a second timecode to show the difference between corrected and elapsed time; I have to talk to some people about what is best for posterity).
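
The first two scripts could look something like the rough sketch below. It rests on assumptions: that each transcript line carries a timestamp in `HHH:MM:SS` form, and that the correction adds 2 hours 40 minutes to every timestamp at or after the epoch. The function names are made up for illustration, and the epoch is left as a parameter because the exact moment still has to be pinned down.

```python
# Assumed timestamp format: "HHH:MM:SS" (hypothetical; adjust to the real transcript).
LAUNCH_DELAY_SECONDS = 2 * 3600 + 40 * 60  # the 2 hour 40 minute launch delay


def parse_timestamp(text):
    """Convert an 'HHH:MM:SS' string to a total number of seconds."""
    hours, minutes, seconds = (int(part) for part in text.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def format_timestamp(total_seconds):
    """Convert a number of seconds back to an 'HHH:MM:SS' string."""
    hours, remainder = divmod(total_seconds, 3600)
    minutes, seconds = divmod(remainder, 60)
    return f"{hours:03d}:{minutes:02d}:{seconds:02d}"


def find_out_of_order(timestamps):
    """Return (index, previous, current) for every timestamp that is
    earlier than the one directly before it."""
    problems = []
    for index in range(1, len(timestamps)):
        previous, current = timestamps[index - 1], timestamps[index]
        if parse_timestamp(current) < parse_timestamp(previous):
            problems.append((index, previous, current))
    return problems


def shift_after_epoch(timestamps, epoch, offset_seconds=LAUNCH_DELAY_SECONDS):
    """Add offset_seconds to every timestamp at or after the epoch;
    earlier timestamps are returned unchanged."""
    epoch_seconds = parse_timestamp(epoch)
    shifted = []
    for text in timestamps:
        seconds = parse_timestamp(text)
        if seconds >= epoch_seconds:
            seconds += offset_seconds
        shifted.append(format_timestamp(seconds))
    return shifted


if __name__ == "__main__":
    sample = ["000:00:10", "000:00:05", "000:01:00"]
    for index, previous, current in find_out_of_order(sample):
        print(f"line {index}: {current} comes after {previous}")
```

Running the order check before the shift matters, since a mistyped timestamp near the epoch could otherwise end up on the wrong side of the correction.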

Next big steps:

- Create a script that renders the corrected transcript data into Apollo Flight Journal HTML format, and work with AFJ to get Apollo 17 online.

- Create a video playback solution for the Premiere segments covering the entire mission.

- Create a link between video playback and the HTML transcripts. Allow jumping within the transcript by scrubbing the video, and allow scrubbing the video by clicking a timecode within the transcript.
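
The two-way link in the last item comes down to a lookup in each direction, sketched below in Python to match the other scripts (a real player would probably do this in the browser). Everything here is an assumption for illustration: the `TranscriptIndex` class, its method names, and the `HHH:MM:SS` timecode format.

```python
import bisect


def to_seconds(timecode):
    """Convert an 'HHH:MM:SS' timecode (assumed format) to seconds."""
    hours, minutes, seconds = (int(part) for part in timecode.split(":"))
    return hours * 3600 + minutes * 60 + seconds


class TranscriptIndex:
    """Hypothetical helper that maps between playback time and transcript entries."""

    def __init__(self, entries):
        # entries: list of (timecode, text) pairs; sorted here so bisect works.
        self.entries = sorted(entries, key=lambda entry: to_seconds(entry[0]))
        self.times = [to_seconds(timecode) for timecode, _ in self.entries]

    def entry_at(self, playback_seconds):
        """Video -> transcript: index of the last entry that starts at or
        before the playback position (the first entry if none has started)."""
        position = bisect.bisect_right(self.times, playback_seconds) - 1
        return max(position, 0)

    def seek_target(self, index):
        """Transcript -> video: the playback time, in seconds, to jump to
        when the timecode of the entry at this index is clicked."""
        return self.times[index]


if __name__ == "__main__":
    index = TranscriptIndex([
        ("000:00:10", "first utterance"),
        ("000:01:00", "second utterance"),
        ("000:02:30", "third utterance"),
    ])
    current = index.entry_at(75)  # scrubbing the video to 1 minute 15 seconds
    print(index.entries[current])
    print(index.seek_target(2))   # clicking the third timecode
```

A binary search keeps the lookup fast even for a mission-length transcript, which matters if the transcript position is updated continuously while the video plays.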

This project is awesome Ben!