Now that the TEC and PAO transcript data is in pipe-delimited CSV format, I can start to use batch cleansing techniques to further clean the raw OCR output data (CSV) into 2nd phase cleaned CSV. These processes are all automated tasks with no manual intervention. Once again, I did this purposely to keep the string of steps automated all the way from the original OCR steps to the cleaned output CSV in case I ever need to go back and change one of my earlier OCR settings etc. Any manual changes to the data would be wiped out by re-exporting any of the earlier steps.

Choosing Python

Python was never in my stable of languages back when I was a full-time hands-on developer earlier in my career. It’s a bit of a newcomer, and to a coder like me it feels like Perl with a whole slew of PHP-like features making it a great choice for automating content clean-up. I won’t go too much into detail about Python, but I do think it’s cool that it forgoes using braces to establish code structure and instead uses indentation itself. Forced visual organization–I like it.

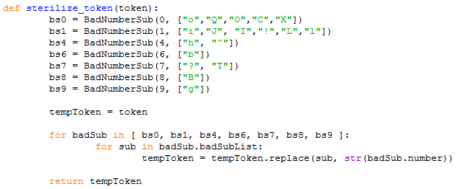

For example, there’s a little routine that someone in the Spacelog project wrote to clean up transcript timecode tokens. I used this routine against the TEC transcript and it worked perfectly. Here’s an excerpt from it:

It takes a timecode in the format 00 00 00 00 (day, hour, minute, second) and uses a list of possible OCR errors for each digit and replaces any of those characters with the corresponding digit. For example, if you find a “B” in the timecode, then it should have been the number “8”.

The process of running a routine like this against the Raw CSV and outputting a Cleaned CSV makes thousands of changes to the transcript without any manual intervention. As I added new cleaning routines to the Python script I improved the cleanliness of the transcript. Some of the Python cleaning functions I wrote are:

- Counting page numbers to make sure no pages were skipped in the OCR step

- Look for verbiage rows with no callsign that start with “Tape” and treat them as page metadata

- Look for callsign OCR errors by checking each callsign against a list of known speakers (callsignList = [ “LAUNCH CNTL”, “CAPCOM”, “PAO”, “SC”, “AMERICA”, “CERNAN”, “SCHMITT”, “EVANS”, “CHALLENGER”, “RECOVERY”, “SPEAKER”, “GREEN”, “ECKER”, “BUTTS”, “JONES”, “HONEYSUCKLE” ])

- Look for out of order timestamps. I found thousands (more on this later)

- A complex one: check if the first entry on a given page has no callsign but isn’t a Page number or Tape number. This is a continuation of an entry on the previous page. Concatenate the content into the previous page’s last entry contiguously. This one found hundreds of wrapped verbiage and fixed them.

Drawing the Line from OCR to Phase 2

At some point in the middle of writing and running these Python routines, slowly making the output cleaner and cleaner, I decided to stop using the OCR output CSV as the source. I drew a line in the sand that meant from this point forth I would be making manual changes to the cleaned TEC CSV file and would no longer export to CSV from FineReader. This next step meant making a “Cleaned CSV Phase 2” file. In other words, the input would now be the “Cleaned CSV” that contained partially Python processed material based on the OCR output, and the output would be a new “Cleaned CSV Phase 2” file.

Simple Search and Replace

Making the move to Phase 2 cleaning allowed me to perform a series of carefully worded search and replace functions on the Cleaned CSV file. These included common OCR errors in the Tape titles for example, and easily identifiable errors that were common in various verbiage entries. Using “replace all” often resulted in thousands of corrections per action, slowly but surely making the content cleaner.

Timestamp Token Transform

This phase 2 process was where many of the Python cleaning routines were written (from the bullet list above). One big scripting item that was accomplished in Phase 2 was my decision to convert the timestamp tokens from 00 00 00 00 format to 000:00:00 format. This 2nd format is hours:minutes:seconds and is referred to as GET , or Ground-Elapsed Time in the mission audio. It would be easy to convert back again if need be for a given output format, but for my purposes I wanted GET because that’s what the PAO transcripts use and what the astronauts themselves used when they spoke throughout the mission. I also wrote a routine to fill in missing timestamps by simply copying the last known timestamp onto every line that was missing one. This is an interim step to the final cleaned output but makes every transcript row a complete record.

The resulting CSV can be found here.

My thoughts then turned to how to address the timestamp issues as a whole. Some timestamps are approximate guesses, many are missing, and history is blurry in this regard. I slowly realized that to establish a true mission timeline I would have an even larger task ahead than simple transcript restoration could address.